Privacy and ethical concerns in Learning Analytics

Written by Régis Chatellier - 02 February 2018

Why should we remain alert to digitalization and new techniques in education?

LINC was invited to participate in a panel organized by the MatHiSiS project, Robots in the classroom, new learning visions in the technological era, as part of CPDP 2018 (the 11th international conference on Computers, Privacy and Data Protection, in Brussels). One of the key questions to be answered had been identified by Gary T. Marx in his book, Windows into the Soul: “Will the habit of technology surveillance at school somnolently create dependency, so that learners of today will be the docile subjects of surveillance tomorrow?” This was an opportunity for LINC to discuss privacy and ethical concerns in Learning Analytics.

Digitalization of education

The digitalization of education comes with emerging learning methods. Teachers and pupils are now using new devices, such as computers, tablets, smartphones or even robots (for example, the open-source Thymio robot); these devices interact with e-learning platforms, MOOCs, and social media.

As a result, learning analytics has emerged as a new discipline. Digitalization and learning analytics come with new types of data processing operations, which are based on the large-scale analysis of personal data concerning children, students or employees. Such data ranges from socio-demographic data (age, gender, level of education, language, etc.) to usage statistics (number of clicks, time and frequency of use, response time), and from performance indicators (such as test results) to behavior (interactions with the machine, or between individuals). The data may be used, for example, to test different types of exercises with pupils, to analyze their response time, to personalize their curriculum, or to give them feedback.
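To make the categories above concrete, here is a minimal sketch of what a single learner record might look like. The class name `LearnerRecord` and all of its fields are hypothetical, chosen only to mirror the categories listed in this article; they do not describe any real platform's schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LearnerRecord:
    """Hypothetical record; every field below is personal data."""

    # Socio-demographic data
    age: int
    gender: str
    education_level: str
    language: str

    # Usage statistics
    clicks: int = 0
    response_times_s: List[float] = field(default_factory=list)

    # Performance indicators (exercise name -> score)
    test_results: Dict[str, float] = field(default_factory=dict)

    def mean_response_time(self) -> float:
        """Average response time in seconds, or 0.0 if nothing was recorded."""
        if not self.response_times_s:
            return 0.0
        return sum(self.response_times_s) / len(self.response_times_s)


record = LearnerRecord(age=12, gender="F", education_level="primary", language="fr")
record.response_times_s.extend([2.0, 4.0])
record.test_results["fractions"] = 0.8
```

Even in this small sketch, combining the fields is enough to single out and profile an individual pupil, which is why such records fall under data protection rules.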

More and more data

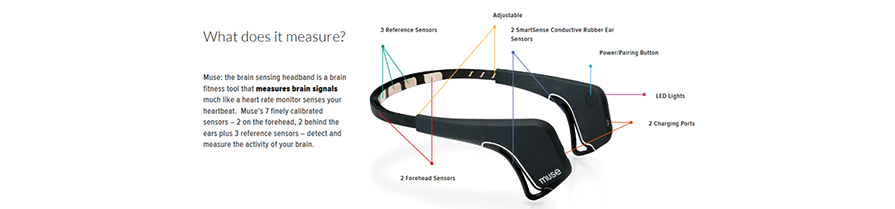

These data seem relatively “ordinary”. However, more sensitive data could be collected in the future, using new sensors. In our report “Data, muses and frontiers of cultural creation”, published in 2015, we explored the future of creative industries and the use of recommendation algorithms in video and music streaming, digital reading and video games. Video games could evolve drastically as new sensors are used to adapt and personalize the experience.

Various tools could be of significant interest in the field of education and learning analytics, such as headbands to measure brain signals, temperature sensors to evaluate stress levels, or cameras for emotion analysis. With such tools, it would be easy for data controllers to understand more accurately the way people use their brains… and, in the end, their psychology.

For example, the Hypermind project is a proactive textbook which uses the reader's eyes as an interface to analyse cognitive behaviours and generate heat maps.

When the student reads more slowly than usual, which suggests that the content may be difficult, the textbook can offer additional information. It can adapt to individual needs and skills: “When the learner is reading the book, the book is reading the learner”.
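The adaptive behaviour described above can be illustrated with a short sketch. This is not how Hypermind works internally; it only assumes that a reading speed in words per minute is available for each passage, and it flags a passage when the current speed falls well below the reader's own baseline. The function name `is_reading_slowly` and the `threshold` parameter are hypothetical.

```python
from statistics import mean, stdev


def is_reading_slowly(history_wpm, current_wpm, threshold=1.5):
    """Return True when current_wpm is far below the reader's own baseline.

    history_wpm: past reading speeds (words per minute) for this reader.
    threshold: how many standard deviations below the mean counts as "slow"
               (an arbitrary value chosen for this sketch).
    """
    if len(history_wpm) < 2:
        return False  # not enough history to build a baseline
    baseline = mean(history_wpm)
    spread = stdev(history_wpm)
    if spread == 0:
        # All past speeds were identical: any slower speed counts as slow.
        return current_wpm < baseline
    return (baseline - current_wpm) / spread > threshold


history = [200, 210, 190, 205, 195]
if is_reading_slowly(history, 150):
    print("Offer additional explanations for this passage")
```

Note that the baseline is built from the individual's own past behaviour: the system has to keep a history of each learner in order to adapt to them, which is exactly what makes the processing sensitive.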

Privacy concerns

These new types of data processing operations raise privacy concerns. First of all, there is a risk that data will be reused for commercial purposes, and the impact on individuals could be very significant in the event of a data breach. In addition, processing children’s data raises specific privacy issues. Consent for the processing of children’s data must meet specific requirements, laid down in Article 8 of the GDPR: “the controller shall make reasonable efforts to verify in such cases that consent is given or authorized by the holder of parental responsibility over the child, taking into consideration available technology”. In practice, this means parents must be aware of the services and tools used at school, and they need to understand the respective privacy policies in order to give their consent by “a clear affirmative act establishing a freely given, specific, informed and unambiguous indication of the data subject's agreement to the processing of personal data”. Parents’ consent may also be related to digital literacy education: if parents do not consent, how will their children learn to use and manage digital services?

Besides, data are becoming increasingly sensitive as learning analytics data are added to existing school records. Since learning analytics promise to predict failure or to detect pupils at risk of dropping out of school, it also becomes essential to stress the need for transparency and the risks of discrimination that could arise from biased or fallacious analytics.

In the long term, we could also imagine data and psychological profiles being used for recruitment or insurance purposes. Some companies have already tried to adapt their insurance policies on the basis of psychological profiles inferred from Facebook profiles: in 2016, Admiral sought to identify risky profiles among young drivers based on their behavior on Facebook.

Ethical Concerns

While the GDPR is suited to address the privacy concerns described above, overuse of learning analytics could raise ethical concerns. Robotizing educational and professional paths in order to spot risks could lock children in “bubbles of failure”, so that data produced when they are young would undermine them throughout their entire lives.

Finally, the CNIL published a report in December 2017 on ethics, algorithms and artificial intelligence, in which we highlight the importance of fairness, as well as constant awareness. These two principles can be useful in the field of education, so that new techniques and innovations benefit pupils and students while continuing to protect their fundamental rights.

Illustration

"Computopia", 1969, Shōnen Sunday magazine