Foray into the security toolbox of large language models (1/2): targeted risks and security by design

Rédigé par Alexis Leautier

-

22 October 2024At a time when conversational agents are multiplying, the security of personal data processed via these interfaces appears to be a major issue. This confidentiality issue is twofold in that it concerns both data submitted by a user and the outputs produced by an interface. To address this issue, manufacturers now offer numerous techniques, with disparate advantages and limitations. These techniques should be mapped.

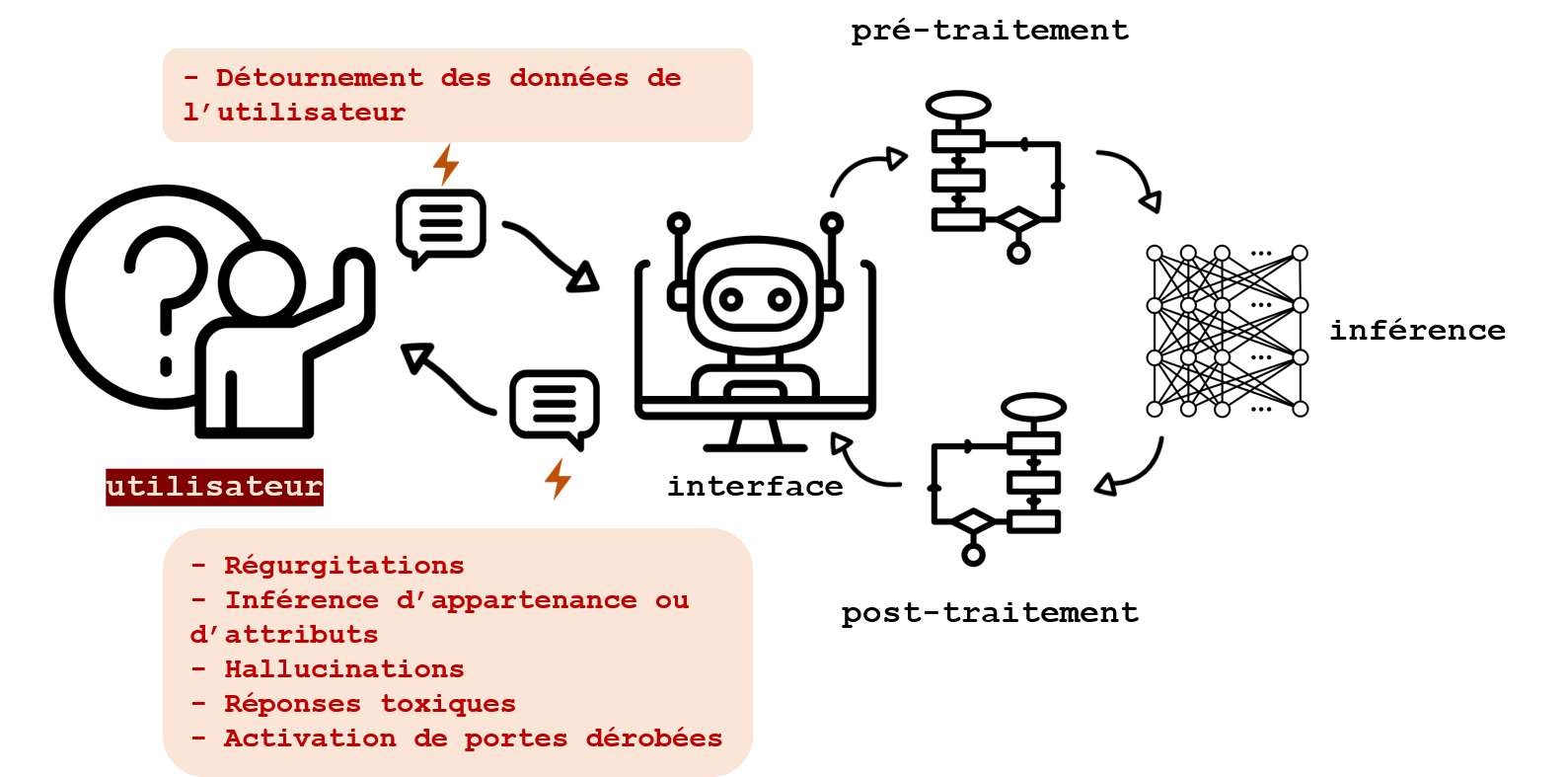

The use of conversational agents involves three categories of specific risks for personal data:

- reconstruction of training data during use,

- the processing of the data provided in the prompt of these tools,

- as well as outputs that can sometimes directly concern an individual.

In view of the uses that are currently observed (from the synthesis of medical documents to artificial girlfriends), the need to protect the data entered in prompts seems to make sense. Risks relating to outputs are also already observed when an agent is questioned about an individual and generates false, and sometimes toxic, information about them. Finally, given that training data can be stored by AI models during their training, the protection of this data when using the models raises questions. Solutions, such as anonymisation of training data or machine de-learning, exist in order to limit these risks upstream of the deployment of a system, but they have limitations. Where training data cannot be anonymised due to, for example, deterioration of the performance of the model, or where the solutions envisaged do not provide the desired level of security, the measures that can be activated when using the model may be an additional guarantee for a more secure deployment.

These two articles focus on Large Language Models, or LLM, but some of the techniques used could extend to other categories of content, as well as to multimodal systems. In this first article, the risks that these techniques attempt to reduce, as well as the security measures relating to the design of the system, are addressed by focusing on issues related to the protection of personal data. The second article identifies the techniques observed to secure the systems when they are used. It details some of their abilities and limitations as described in the academic literature.

What risks are we responding to ?

Through operations carried out on the model itself, on the input data or on the responses of the LLM, the security measures apply in many cases of use. However, the risks assessed below concern only the protection of personal data, whether processed for training or during use.

Disclosure of personal data

By regurgitation

First, the outputs produced by the LLM (or generations) may contain personal or confidential data from the training data. This generation may be similar to a reproduction when this information is present in the learning game and has been stored during the learning, as shown in particular by Carlini et al., 2021, or by the reproduction of New York Times articles by ChatGPT, as reported by The Verge. This is referred to as regurgitation. This category of risk can have significant consequences on the confidentiality of personal data, particularly when a LLM has been trained on sensitive data or data whose disclosure could have serious consequences for individuals.

By membership inference or reversal of model

The risks associated with the protection of personal data do not stop at regurgitation. Some attacks, such as Membership Inference Attacks, or model reversal, can make it possible to disclose information about the individuals whose data is used for training. These attacks are described in detail in the article “Petite taxonomie des attaques des systèmes d’IA” (Small taxonomy of AI system attacks). They could make it possible to reconstruct a medical letter used for training, or even to learn that an individual suffers from a particular disease specific to the medical cohort to which he or she belongs.

The insertion of back doors

Another category of attacks concerns the insertion of backdoors during training. For an LLM, it is a question of teaching it to reproduce a particular behaviour such as the generation of particular text by training it on data chosen or designed for it. These attacks are generally not intended to access training data but rather to circumvent a security mechanism or induce the user to disclose certain information. They are often undetectable in the normal behaviour of LLMs (hence their name) but can be activated when a certain query is provided, as illustrated by Rando and Tramèr, 2024 in the case of data used for the RLHF (reinforcement learning from human feedback). They can be based on the generation of malicious code, which once executed will give the attacker access or provide the attacker with confidential information such as access tokens. Other more generalist attacks can generate malicious text inviting the user to share confidential information.

Generating hallucinations

The production of false information is sometimes due to its presence in the training corpus, but it may also be due to the purely probabilistic nature of the functioning of LLMs. When the model generates a factually false response by selecting the most likely sequence of tokens, we talk about hallucinations. These may pose a privacy problem when the information generated concerns natural persons. This risk was notably illustrated recently when a journalist was attributed by Copilot's AI to crimes he had investigated, as reported in this article in The Conversation.

Generating toxic responses

LLM outputs may also not meet the expectations of deployers and their users, and may offer hateful, violent or extremist content. The production of this content may be due to two main causes:

- The presence of similar content in the training set leading the model to reproduce it during use,

- An express request from the user to generate such content. This request can be made directly, or via the use of processes to circumvent the protections put in place: in this second case, it is called a “jailbreak”, as illustrated by Perez and Ribeiro, 2022 or Wei et al., 2023.

These generations can be used to produce mass content for dissemination on social networks, contribute to the communication of extremist actors, or facilitate certain malicious acts (such as the production of malicious computer code). They can also affect a person’s reputation when they concern an individual.

Misappropriation of the data provided in the prompt

Finally, the risks may be related to the disclosure of protected data in the tool prompt. This information could be retrieved by the system deployer for malicious purposes, or misappropriated from its primary purpose. In general, this protected data is at risk when it is shared with players who do not have to have access to it, in particular because these players are then in charge of the security of the information provided to them. The security of the input data provided to agents is thus also within the scope of our study.

Each of these risks is referred to in this article; however, since the angle chosen relates to the technique and not to the risks, only some of the solutions allowing for protection against it will be addressed. Among these techniques, a distinction can be made between those relating to

- the security of the system as a whole: detailed in the rest of this page,

- queries (the input data entered in the agent’s prompt): detailed in the second article,

- the outputs produced by the LLM: also detailed in the second article.

The importance of chatbot security

The corrective measures to be applied when using the tool, i.e. at the last moment, are promoted by the manufacturers and have a certain interest. However, some techniques are applicable from the design phase of the system and their usefulness must not be neglected. The rest of this article lists these techniques, without seeking to detail them or assess their effectiveness.

There is no shortage of diversity in this field, as evidenced by the surveys carried out by Patel et al., 2024, Yao et al., 2024, Chawin or Yan et al., 2024. However, these measures generally present disadvantages related to the performance of the model obtained, their cost (in terms of resources and skills), or governance (only model developers will generally be able to implement or propose them). Moreover, most of these solutions do not provide any formal guarantees, which can also be attacked, although they eliminate a large part of the risks in an ideal scenario. Thus, filtering techniques are of interest since they are solutions that can be added to a shelf model, and can be implemented more easily by deployers, in particular thanks to open tools.

Measures relating to training data, such as

- anonymisation, by means of differential privacy, for example, or

- data cleansing, aimed at deleting non-relevant directly identifying data, or avoiding storage by deduplication of data, for example;

Measures relating to the model architecture :

- Yao et al., 2024 provides a list of publications that tend to show that certain models are more robust to attacks (including jailbreaks) and more suited to the use of differential privacy during learning, or that the use of knowledge graphs or cognitive architectures can improve security;

Measures related to the learning protocol used, such as

- differential privacy applied during learning, which makes it possible to obtain an anonymous model when the amount of noise added is sufficient (sometimes to the detriment of the quality of the performance of the model obtained),

- the choice of hyperparameters to limit memorisation during learning, as described by Berthelier et al., 2023,

- generalisation methods, which make it possible to strengthen robustness to attacks. These include: opposing learning, illustrated by the protocol proposed by Liu et al., 2020, which makes robustness to opposing attacks a learning objective directly integrated into the cost function,

- decentralised learning techniques (like federated learning) that allow more traceability on training data (and thus prevent data poisoning in particular),

- “off-site” adjustment (described by Xiao et al., 2023), where the model developer retains control over the pre-trained model and the deployer controls the adjustment data. This technique makes it possible to avoid both communication of the pre-trained LLM and adjustment data,

- encryption techniques that prevent the disclosure of secrets during training or complicate attacks on the model (such as homomorphic encryption, which is useful for training certain models, or secure multi-party computation).

Measures on the pre-trained model, such as

- model selection, when a pre-trained model is selected from the shelf. This choice may include criteria such as the implementation of the previous measures during its development, greater transparency in its development, better governance, or better results obtained in a benchmark (such as that proposed by the University of Edinburgh comparing the risk of hallucinations of several LLMs, or that proposed by Mireshghallah et al., 2023 comparing the propensity of several models to respect the confidentiality of the information contained in the conversation history),

- machine learning methods, to which an article from Linc was devoted, and which make it possible to erase the influence of certain data on the parameters of a model,

- reinforcement learning: still evolving, this area aims to adjust the model so that it follows certain rules without these rules being imposed as explicit constraints. They require feedback of two types:

- human, in the case of RLHF (reinforcement learning from human feedback), where the pre-trained model is adjusted by a specific protocol. This protocol may require the training of a third-party model called the reward model on a human-labelled database. In addition, new methods to overcome the reinforcement learning phase are emerging, such as Direct Preference Optimization, proposed by Rafailov et al., 2023, which incorporates alignment into the cost function used for training,

- automatic, coming from another AI, whose principle is the same as that of the RLHF, but where the feedback is produced by a model configured to follow certain principles. These principles may be contained in a “constitution”, a term introduced by Bai et al., 2022.

Measures relating to inference, such as the choice of hyperparameters such as temperature (which artificially increases the probability of generating a less likely response and thus promotes creativity at the expense of predictability).

These measures, which make it possible to deploy a secure system, are important but are not applicable in all cases, in particular depending on the functionality of the model, the categories of data processed, or even depending on the deployer’s control over the system. Thus, additional measures aimed at securing the system in which the AI model is integrated can be useful, in order to secure deployment on the fly. For more information, read on (Link).

Illustration: Danit Soh