LINC: In your work you lead a number of activities to reach out to young people, parents, teachers, and stakeholders to engage with the issues concerning algorithmic decision making in online services, such as social media. Could you tell us more about these activities and the type of actions you engage in?

In 2016 we launched the EPSRC-funded UnBias project to move the discussion about privacy and human rights online beyond questions of personal data collection to the way in which this data is used for algorithmic decision making that biases the information and choices that people are given when they use online services. The subtitle of this project was “Emancipating Users Against Algorithmic Biases for a Trusted Digital Economy”, highlighting that we placed the users at the centre. The key demographic we decided to focus on was 13-to-17-year-old “digital natives”, because they are heavily exposed to online services, yet those services have been built by adults with adult users in mind.

The project consisted of four parallel work streams. The first focused on hearing from users in their own words how they use algorithmically mediated online services, and what their concerns are. The second explored technical design elements that potentially raise bias issues in algorithmic decision making. The third used user observation studies to explore how users interact with internet services and algorithmic recommendations. The fourth focused on engaging with industry, civil-society, teachers, academic and regulatory stakeholders to translate the findings from the other work streams into educational material, and design and policy recommendations.

As part of the more general mission to raise awareness about bias in algorithmic decision making, we have also pursued a vigorous agenda of engaging with the public and with stakeholder groups at events such as science festivals (e.g. MozFest), multi-stakeholder policy forums (e.g. the Internet Governance Forum) and industry standards development activities (e.g. IEEE and ISO/IEC working groups), and we have provided evidence in response to UK and EU parliamentary inquiries.

The goal is thus to make these issues very concrete so people can actually figure out what is at stake. What kind of tools do you rely on for this?

In order to encourage meaningful discussion with young people about their online worlds, we adapted the “Youth Juries” workshop methodology that we had previously developed as part of a collaboration with the 5Rights Foundation and Prof Coleman from Leeds University during a project on Citizen-centric approaches to Social Media analysis. The ‘juries’ are highly interactive focus groups with the explicit objective of arriving at clear recommendations regarding social media regulation. The UnBias Youth Juries were designed to take about two hours to run and involved three main themes, with a couple of tasks per theme:

- The use of algorithms: mapping your online world; data used in personalisation; data as currency; personal filter bubbles

- The regulation of algorithms: presentation of a case study, e.g. inappropriate content on a social media feed, with discussion about who might be to blame

- Algorithm transparency: discussion about meaningful transparency; request for recommendations or suggestions from the participants about increasing fairness and preventing bias

In order to make the examples and presentation methods appealing to the target audience of 13-to-17-year-olds, they were co-designed with the help of an advisory group of kids from the schools that participated in our events.

As part of the mission to develop educational materials for general use, all of the materials, scenarios and guides on running the UnBias Youth Juries have been made freely available online.

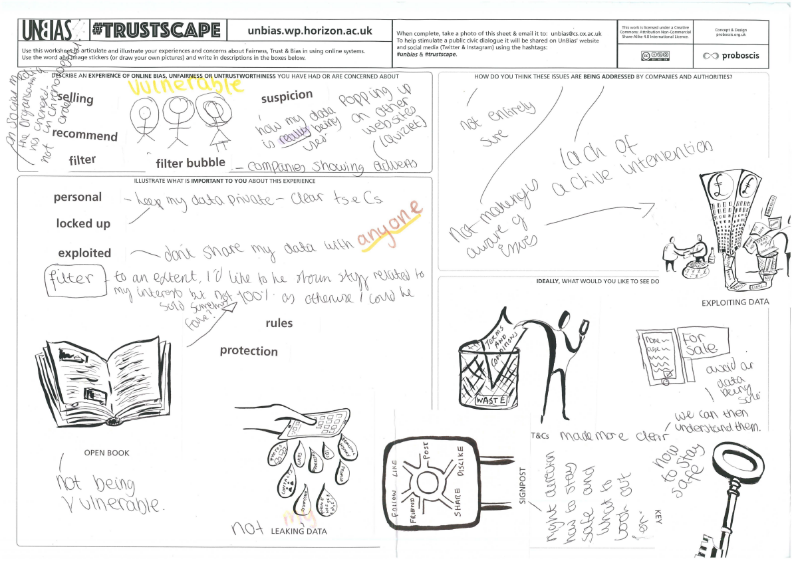

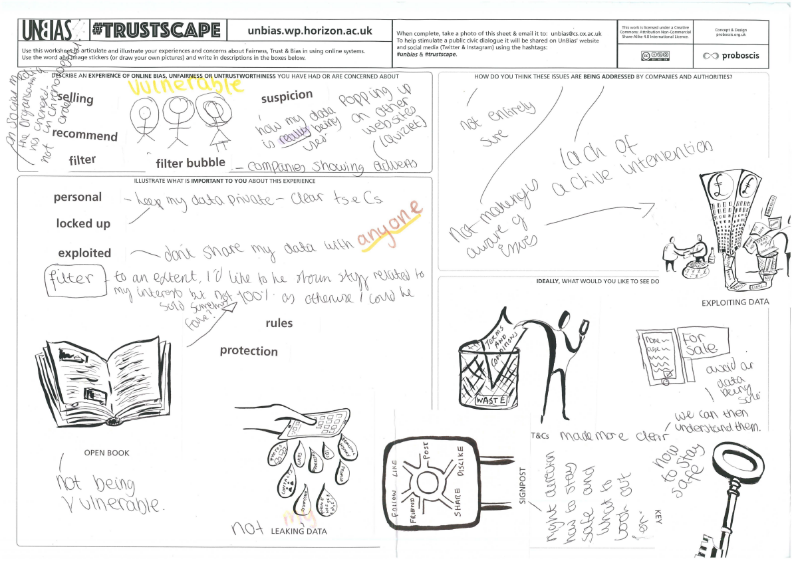

A second set of tools that was developed during the project is the UnBias “Fairness Toolkit”. Whereas the Youth Juries were very much part of the process of data collection for the project, the Fairness Toolkit was built to address the issues that were raised by the participants in our project outputs.

The Fairness Toolkit aims to promote awareness and stimulate a public civic dialogue about how algorithms shape online experiences and to reflect on possible changes to address issues of online unfairness. The tools are not just for critical thinking, but for civic thinking – supporting a more collective approach to imagining the future as a contrast to the individual atomising effect that such technologies often cause.

The toolkit consists of:

- Awareness Cards

- TrustScape

- MetaMap

- Value Perception Worksheets

The Awareness Cards are a deck of 63 cards designed to help users build awareness of how bias and unfairness can occur in algorithmic systems, and to reflect on how they themselves might be affected.

The MetaMaps are designed as a tool for stakeholders in the ICT industry, policymaking, regulation, the public sector and research to respond to the young people’s TrustScapes. By selecting and incorporating a TrustScape from those shared online, stakeholders can respond to the young people’s perceptions. MetaMaps will also be captured and shared online to enhance the public civic dialogue, and to demonstrate to young people the value of participation by having their voices heard and responded to.

The Value Perception Worksheets provide a means of assessing and evaluating experiences of using the Toolkit. They use a matrix for exploring Motivations, Opportunities, Costs and Values, as well as other factors which cut across or link them. One sheet is intended for general users/participants, the other for ICT industry stakeholders.

The Fairness Toolkit was co-developed with the artist-led creative studio Proboscis and is freely available online.

The Fairness Toolkit was completed in its current form in August 2018 and we are currently engaging with a range of potential users to explore the best ways to make use of these tools. Current collaborations include developing a curriculum module for undergraduate students at SOAS, and working with schools in Nottingham to refine the use of the tools by teachers and parents, and for peer-led activities by high-school kids. We are also running workshops at events such as the V&A Design Weekend and in collaboration with the Internet Society (ISOC-UK), and we are exploring a collaboration with the IIFT to see how our tools could contribute to digital literacy education in India. If anyone is interested, we are open to exploring possible uses or adaptations of the tools in other national or organisational contexts.

Among your various activities you are also chairman of the IEEE P7003 working group that aims at developing standards for algorithmic bias considerations. Could you tell us more about the goals of setting such standards?

The IEEE P7003 Standard for Algorithmic Bias Considerations aims to provide a framework to help developers of algorithmic systems, and those responsible for their deployment, to identify and mitigate unintended, unjustified and/or inappropriate biases in the outcomes of the algorithmic system. The standard will describe specific methodologies that allow users of the standard to assert how they worked to address and eliminate such bias in the creation of their algorithmic system. This will help to design systems that are more easily auditable by external parties (such as regulatory bodies). The work on this standard started in early 2017 and is anticipated to be ready for publication towards the end of 2019 or the beginning of 2020. Though the working group is chaired by me, the standard development is a multi-stakeholder collaboration involving more than 70 individuals from academia, industry and civil society, coming from every continent, and with expertise ranging from Computer Science and Engineering to Law, Sociology, Medicine, Business and Insurance. If anyone is interested in joining the working group to help establish a framework for industry best practices in addressing algorithmic bias, feel free to get in touch. We are especially interested to hear from people from minority groups who can help us better understand the types and causes of bias they may have encountered.

This work is part of the larger Global Initiative on Ethics of Autonomous and Intelligent Systems project of the IEEE (Institute of Electrical and Electronics Engineers). Why is such a technical organisation interested in this subject?

The IEEE launched the “Global Initiative” in 2016 in recognition of the growing impact and importance of Autonomous and Intelligent Systems (A/IS), also known as “AI systems”, and the need to ensure that these technologies are used for the benefit of humanity. The initiative was launched with two main strands of activities: 1. the development of a public discussion document, “Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems”, on establishing ethical and social implementations for intelligent and autonomous systems and technology aligned with values and ethical principles that prioritize human well-being in a given cultural context; 2. a series of A/IS ethics-related industry standards, the P70xx series, to provide the industry with guidance on best practice for the ethical use of A/IS. There are currently 14 such standards in development (see details here).

In addition to those two initial strands of activities the IEEE Global Initiative is now looking to develop educational materials on A/IS Ethics for students and professionals and work with industry stakeholders to establish an Ethics Certification Program for Autonomous and Intelligent Systems (ECPAIS). The initial focus for ECPAIS is on delivering criteria and process for certification / marks focused on Transparency, Accountability and Algorithmic Bias in A/IS.

The transmission of knowledge on these subjects to the general public is crucial. You seem to be heavily involved in such activities. Could you tell us more about this?

As part of our work toward providing policy recommendations regarding the governance of algorithmic systems, we submitted evidence to UK parliamentary inquiries on “Impact of social media and screen-use on young people’s health”, “Lords Select Committee on Artificial Intelligence”, “Algorithms in decision-making”, “Fake News”, “The Internet: to regulate or not to regulate” and “Children and the Internet”; participated in numerous policy events (e.g. EPDS and European Parliament organised symposia on AI); and established a long-running connection with a group at the European Commission’s DG Connect working on algorithmic governance issues.

At the start of 2018 we were invited by the European Parliamentary Research Service to prepare a Science and Technology Options Assessment report on “A governance framework for algorithmic accountability and transparency”, which we presented at the European Parliament on October 25th 2018.

Another important policy activity we are currently engaged in is supporting the UK Information Commissioner’s Office (ICO) with the collection of evidence as part of the process toward developing a Code for Age Appropriate Design for Information Society Services, which is a requirement under the UK Data Protection Act 2018. The Age Appropriate Design Code is the result of an amendment to the Data Protection Act that Baroness Kidron, founder of 5Rights Foundation, was instrumental in getting introduced. The Age Appropriate Design Code will set out in law a data regime that reflects and respects the needs and rights of children, and in doing so will embody the assertion of the General Data Protection Regulation (“GDPR”) that “children merit specific protection”. It introduces data protection by design, and meaningful choice for users under the age of 18. The Code acknowledges that children are not a homogeneous group, but rather that their needs differ according to their development stage and context.

Further reading: