Smart City - Four scenarios for using data to restore the balance between public and private spheres

In the face of the contradictory imperatives of the smart city (personalizing everything while respecting the right to privacy, optimizing without excluding) and in response to the new landscape, particularly the arrival of major data companies, the challenge now is to produce new models for regulating city data, ones that respect individuals and their freedoms.

This article is translated from the last part of our report: "La plateforme d'une ville - Les données personnelles au coeur de la Fabrique de la smart city".

How should data that offers powerful added value for the general interest, but is collected and used by private actors, be shared with public actors while respecting the rights of the businesses that collect and process the data as well as the rights and freedoms of the individuals concerned? This is the question that laws and public policies are currently trying to answer. Other sections in La plateforme d’une ville (The Platform of a City, available online in French only) describe how the digital city’s new services rely increasingly on personal data that is collected and processed for commercial ends by private actors.

This data, which does not fall within the natural ambit of a public service (whether directly managed, under concession, etc.), does nonetheless interact profoundly with issues of public service and can be invaluable in the delivery of a public service mission.

At present, a number of different tools are being developed by the various stakeholders in this debate. All these tools have serious limitations but also represent real opportunities. Each relies on achieving the right balance of rights and obligations between the various actors involved.

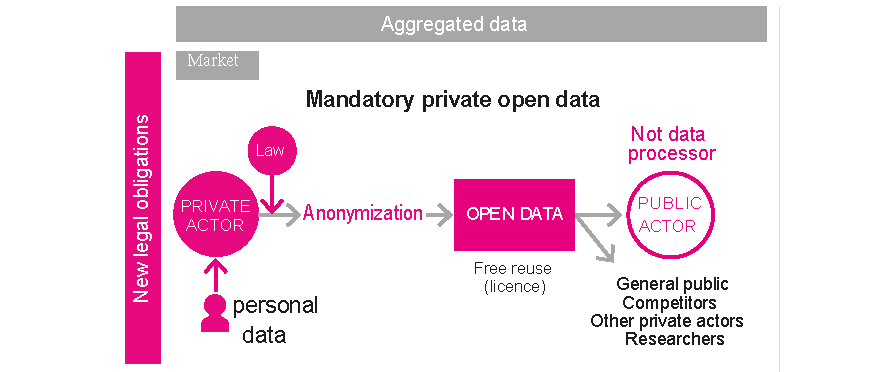

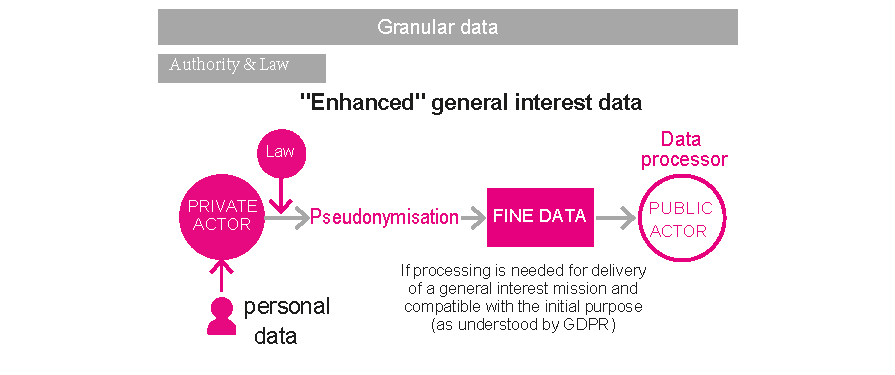

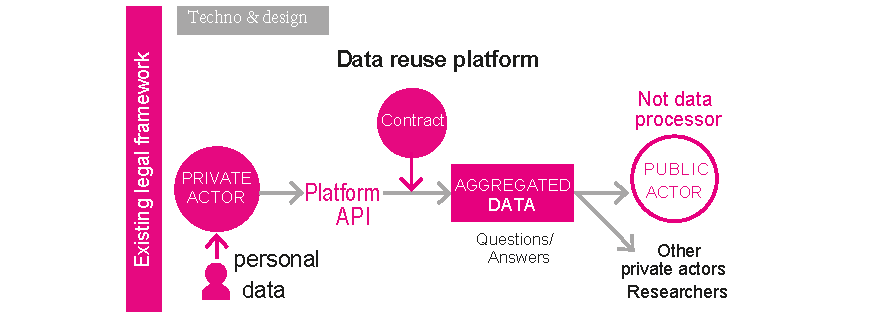

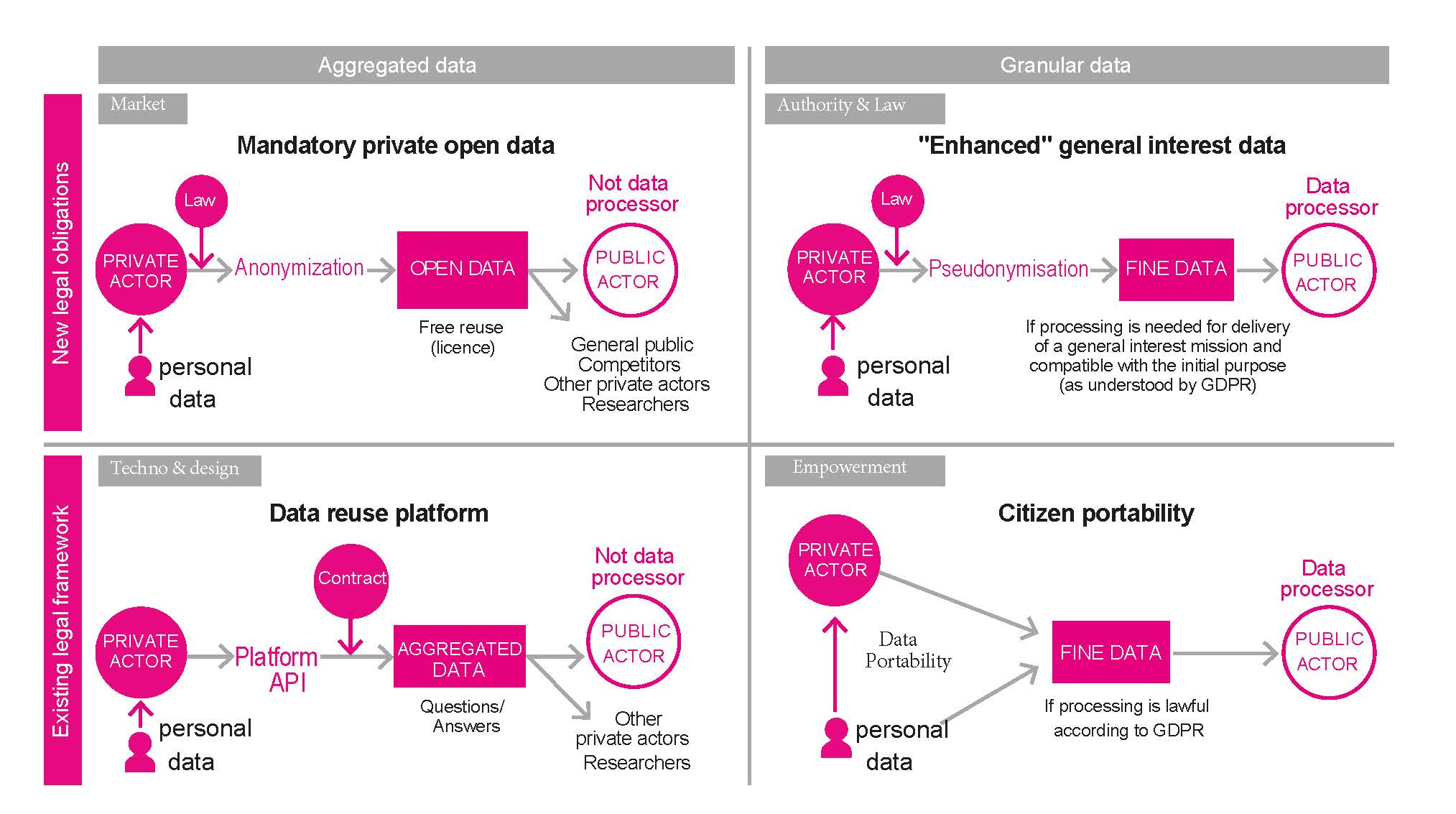

These tools can be characterized according to two features. First are the legal obligations they impose on private actors: among the four proposals described below, some could be rolled out within existing legislative frameworks whereas others would require new legislation before they could be put into practice. Then comes the question of data granularity: in some cases very fine data, including personal data, is sent to the public actor; in others, the public actor can access data only once it is aggregated and anonymized.
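The difference between fine data and aggregated data can be illustrated with a minimal Python sketch. The record fields (`user_id`, `zone`, `hour`) and the threshold `K_MIN` are hypothetical choices made for illustration only; they are not taken from the report. Dropping small cells reduces re-identification risk but is not, on its own, sufficient to make a dataset anonymous.

```python
from collections import Counter

K_MIN = 5  # hypothetical threshold: cells with fewer records are suppressed


def aggregate_trips(records, k_min=K_MIN):
    """Count records per (zone, hour) cell and drop cells below k_min.

    The output contains no user identifiers, only counts per cell.
    """
    counts = Counter((r["zone"], r["hour"]) for r in records)
    return {cell: n for cell, n in counts.items() if n >= k_min}


# Fine-grained records, as a private actor might hold them (invented data).
fine_records = (
    [{"user_id": f"u{i}", "zone": "A", "hour": 8} for i in range(6)]
    + [{"user_id": "u99", "zone": "B", "hour": 23}]
)

# Aggregated view, as a public actor might receive it.
aggregated = aggregate_trips(fine_records)
print(aggregated)  # {('A', 8): 6}
```

The single late-night trip in zone B disappears from the aggregated view. This is the trade-off in concrete terms: the individual is better protected, but the public actor loses detail.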

In a previous report, Partage !, we showed that a traditional regulatory model in isolation has little chance of being effective. A regulatory posture adapted to these platforms requires a new and more dynamic balance, drawing on a palette of regulatory mechanisms that provide a range of levers acting on: the balance of power between actors (market); technical systems and architecture (technology and design); ground rules (regulator and standards); and, lastly, self-determination and returning power to the individual (empowerment). By combining the two features (legal obligations and data granularity) with the four regulatory levers, we obtain a matrix of four distinct scenarios that represent as many possible futures, as alternatives or in combination, for new forms of data sharing.

These scenarios offer different options for dividing the challenge of exploiting fine data and reassigning the capacity to take action in the general interest, redefining the balance of power between public and private actors within the realm of public service.

They differ in how they allocate responsibility for personal data protection, which can lie with either the private or the public actor. Whatever the scenario, the challenge is to establish best practices guaranteeing that the rights and freedoms of the people providing data are respected.

Without passing judgment on any of these mechanisms, we set out the basic structure of each and highlight its potential, in order to identify the questions it raises for the protection of people’s personal data.

Generalizing open data for the private sector

Acting on balances of power and creating the conditions for effective self-regulation might involve setting up mandatory private sector open data policies for data with proven impact on the efficient operation of the market or policies in the general interest.

Private actors would be under a legal obligation to provide open data access to certain data they hold, for example as provided for under two French laws, the so-called “Macron Act” and the Act for Energy Transition. In order for this process to meet personal data protection requirements, in most cases implementation would involve anonymization processes that comply with certification requirements.

The advantage of this mechanism is that data can be reused without restriction, by competitors, public bodies, researchers, citizens, etc. However, it is not without its drawbacks. Anonymization comes at a price: financial for the private actor and in terms of the loss of dataset information for other users; public actors will not have access, for instance, to the very fine data that contributes to the success of general interest missions. Private actors remain in control of the quality of the datasets retrieved.

Extending general interest data beyond public services concessions

To change the ground rules is to take the view that overriding higher interests justify delineating the intangible limits society has set on ethical and political subjects. In this scenario, the issue is allowing and regulating reuse of personal data by public actors for certain purposes in the general interest, but without infringing the rights of individuals. This would involve extending the scope and modalities of the emerging notion of general interest data. Currently, general interest data is limited in France to companies operating public service concessions. In this scenario, it would be extended to private actors beyond any contractual relationship they have with public authorities.

This data is currently anonymized by the private actor prior to being made available as open data. The idea would be to open the way for certain fine data to be provided to public actors for public service missions; the public actors would then be responsible for data anonymization where it is made available as open data.

A balance of interests should make it possible to avoid harming the interests of a private actor that had invested in proprietary data processing and also to avoid violating individuals’ right to privacy, as they would have consented to data processing within the context of a specific service. Public authorities become responsible for data processing and must respect all applicable rules (legal basis, purpose limitation, compliance with all data protection principles, etc.).

Such a mechanism would offer the advantage of resetting the balance of power between certain private actors and public authorities, which would form an effective lever for successfully accomplishing general interest missions without any infringement of the rights of individuals. The drawback of this scenario is its burdensome nature, both for the private businesses obliged to hand over data and for the public bodies that use it and become responsible for personal data protection.

This scenario has a number of backers. In the wake of France’s Act for a Digital Republic, which set out the broad lines, and the 2015 report on general interest data by the French Ministry of Economy, similar hypotheses have been developed by the European Commission in its work on the free flow of data and in Luc Belot’s report to the French parliament, which calls for the definition and identification of a “territorial interest data” category.

Permitted reuse under control of private actors

- legal: a contract must govern what reusers may or may not do; for example, a clause prohibiting a partner from attempting to reidentify people and thereby compromise their anonymity, as well as clauses detailing how liability is to be apportioned;

- technical: real-time audits, controls, checks and log analyses to deliver dynamic risk analysis, for example to limit the chance of database inference attacks.
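As one illustration of the technical safeguards listed above, the following Python sketch combines an audit log with a minimum query-set size. Every name in it (`AuditedDataset`, `MIN_QUERY_SET`, the partner label) is hypothetical and introduced for illustration only. It is a simplified sketch under these assumptions, not a description of any actual platform. A size restriction alone does not stop all inference attacks (differencing between overlapping queries remains possible), which is why the logged trail matters for later analysis.

```python
MIN_QUERY_SET = 10  # hypothetical: refuse counts computed over fewer rows


class AuditedDataset:
    """Wraps a dataset so every count query is logged and size-checked."""

    def __init__(self, rows, min_query_set=MIN_QUERY_SET):
        self._rows = rows
        self._min = min_query_set
        self.audit_trail = []  # kept by the data holder for log analysis

    def count(self, partner, predicate):
        """Return the number of matching rows, or None if the set is too small."""
        matched = sum(1 for row in self._rows if predicate(row))
        allowed = matched >= self._min
        self.audit_trail.append(
            {"partner": partner, "matched": matched, "allowed": allowed}
        )
        return matched if allowed else None


# Invented example data: 20 rows in zone A, one isolated row in zone B.
rows = [{"zone": "A", "age": 30 + i % 5} for i in range(20)]
rows.append({"zone": "B", "age": 71})

dataset = AuditedDataset(rows)
broad = dataset.count("partner-x", lambda r: r["zone"] == "A")
narrow = dataset.count("partner-x", lambda r: r["age"] == 71)
print(broad, narrow)  # 20 None
```

The narrow query, which would single out one person, is refused, and both requests stay in `audit_trail`, where repeated borderline queries from the same partner could be spotted.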

Engaging citizen portability

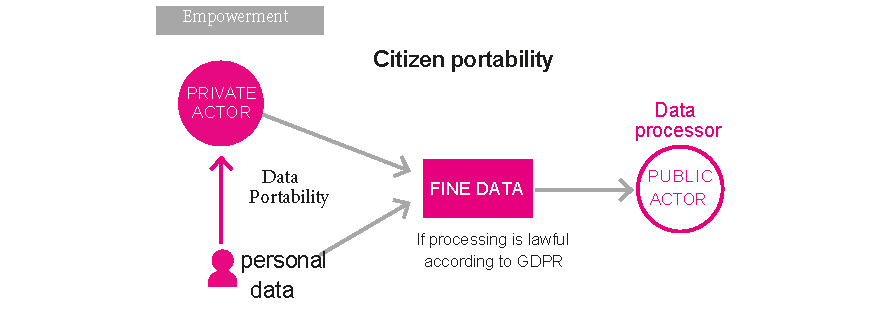

The new regulations governing personal data protection offer everybody the opportunity to determine how their data is used and empower citizens to participate in missions of general interest.

The General Data Protection Regulation (GDPR) introduces a right to data portability that promotes the reuse of personal data by a new processor, without any obstruction by the initial processor, and under the exclusive control of the person concerned. This arrangement, which will enable users to migrate from one ecosystem of services to another (competing or not) and bring their own data with them, might also enable them to opt in to citizen portability to benefit general interest missions.

Communities of users could exercise their portability rights in relation to a service in order to provide a public actor with access to their data for a specific purpose relating to a public service mission. The public actor is then responsible for data processing and is therefore also required to respect the principles of data protection.

Such a mechanism would have the advantage of creating new datasets for use in public service without imposing new legal restrictions on private actors. The drawback of this scenario is the critical mass required: widespread acceptance and participation will be needed to constitute relevant datasets. The incorporation of simplified, innovative and non-restrictive opt-in systems should help ramp up participation levels.

In a more forward-looking vision, a process such as this could lead to bottom-up creation of an information commons, built by individuals in the general interest. This would entail building governance processes for the information commons, perhaps in the form of publicly owned and managed local data corporations.

CNIL takes the view that any adjustments to the balance of power between private and public actors concerning city management and intended to improve public policy must go hand in hand with greater oversight of public authorities. They will be required to adhere to GDPR and specifically the notion of legitimate purpose in regard to reuse of the data provided.