Privacy Research Day 2024 follow-up and discussions

Written by Vincent Toubiana


17 March 2025

For the last three years, follow-up meetings have been organized with the participants of our annual academic conference, the Privacy Research Day. These meetings are an opportunity to present the CNIL and to have informal exchanges with participants about research projects and potential collaborations. In this article, we provide a quick summary of the ideas that emerged during these meetings.

A quick presentation of the CNIL

The Privacy Research Day aims to help the CNIL learn about academic communities and ongoing research related to data protection and regulation. On the day following the conference, an informal meeting and workshops are set up between the researchers who presented their work and CNIL agents. The day starts with a quick presentation of the CNIL's organization and activities, with a focus on enforcement actions and our research projects. We present the CNIL's actions, together with the challenges we face and our strategies to address them from a research perspective. This is a dense 90-minute presentation that we are, by the way, happy to give at any time at other research lab seminars, either remotely or in person [the slides of this year's presentation are here].

The meeting is notably meant to build bridges with the research community and to find ways to combine our activities. In 2024, the discussion led to several ideas: disseminating the CNIL's publications that are relevant to the research community; publishing a list of research questions, as the Federal Trade Commission (often viewed as an example) has done; and organizing our own workshop, like ConPro.

We have taken note of these recommendations and plan to follow them in the coming months (as this website is getting an English version).

Thematic workshops

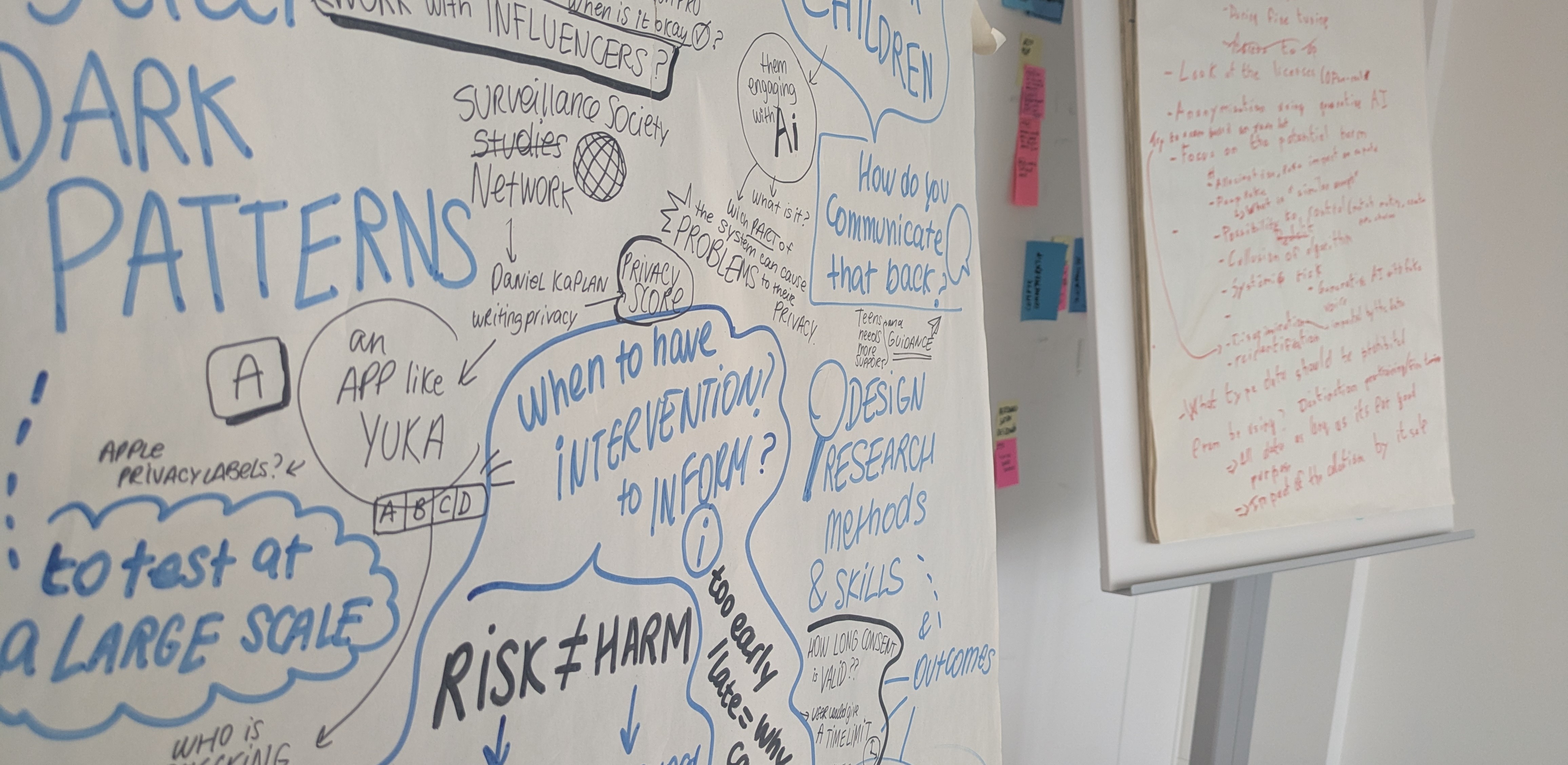

The meeting then splits into three workshops on topics that emerged during the conference. In 2024, the topics were “AI Regulation”, “Raising Awareness” and “Science for Regulation”. Below is a quick summary of the main ideas that emerged during these workshops.

AI Regulation

In our discussion, we identified several challenges and risks related to AI models and systems trained on personal data. One of the main concerns was the exercise of rights, including the deletion of specific data used in AI training, as well as the need for access to algorithms to detect behaviors both after training and during fine-tuning. One idea that emerged was to examine open-source model licenses for any mention of the “exercise of rights” or GDPR compliance and, where such provisions are absent, to draft a relevant paragraph to ensure coverage.

We also explored the complex question of which types of data should not be used to train AI, such as health, geolocation, or financial data, acknowledging that there was no simple answer. Other significant points we noted include the risk of discrimination or bias, particularly against minorities, and the need to identify risks related to the misuse of AI, whether generative or otherwise.

As we could not fully address the question of data, we discussed a potential taxonomy of harmful uses of AI, such as:

- Reputational impact (hallucinations, deepfakes)
- Impersonation (using deepfakes or voice)
- Economic market failure (simplified collusion)
- Simplified reidentification (reidentifying text by asking an LLM)
- Systemic risk (impact on elections through micro-targeting)
- Manipulation (micro-generated ads featuring people who look like your friends)
- IP issues ...

Finally, we discussed the use of AI in research, for example to carry out large-scale analyses, and weighed the pros and cons of using LLMs.

Raising Awareness

The second group discussed the challenges of raising awareness about privacy issues. The group considered how information could be useful for long-term education about privacy. For example, in personalized advertising, how can we effectively explain the processes behind cookie banners and remind users of what these abstract concepts mean to them? The group stressed that different communication methods were necessary for different audiences, particularly when addressing children, who may find data processes overly abstract, or teenagers, who may not feel concerned about data protection. We noted that communicating the risks and harms associated with data usage needed to be audience-specific, and that it was crucial to choose the right time to inform people, as timing affects how they perceive the importance of the issue.

In terms of solutions, empowering end-users by helping them understand the consequences of privacy-preserving actions is key. One concrete example that was mentioned was to show users the impact of turning off their GPS or refusing cookies, even if they have previously accepted them. We also considered that, to be effective and balanced, such information should mention both the risks and the benefits of using these technologies.

Finally, we discussed the idea of giving users an overview of their digital footprint, including all the things they have consented to or subscribed to. By providing human-scale examples and using metaphors or data visualizations, we could help users understand the impact of their online actions and nudge them toward changing their habits. This approach could be inspired by ecological impact evaluations, using everyday examples to explain complex issues like data usage.

Science for Regulation

The third group explored various aspects of sharing data and competencies, focusing on how authorities and researchers can use the same datasets to help enforce regulations. Regulators would also benefit from academic expertise to use, crawl, and investigate data. The group identified several research questions, such as:

- How can we provide a better understanding and interpretation of policy documents and the research questions they raise? By bridging these gaps, we aim to find policy-relevant research questions and align research efforts with the priorities of regulators.

- How can we make these regulatory priorities more accessible, particularly by sharing them in English and anticipating upcoming objectives?

One major challenge we discussed is the difference in institutional and organizational structures between academics and regulators. Academic research timelines and processes often don't align with those of regulators, which makes it difficult to translate technical research into something understandable and actionable for lawyers and policymakers. Researchers typically focus on very specific topics, which may be difficult to apply within the broader legal, political, and economic constraints that regulators face. Furthermore, scientific results, even when they are robust, may not be easily generalizable. A potential recommendation would be for institutions like the CNIL/LINC to join (more) research projects and/or host PhD students to facilitate exchanges.

In terms of promotion and networking, the group emphasized the need to demonstrate the impact of research, particularly through concrete enforcement. We wondered why research doesn’t seem to inform investigations in the way press coverage does, even though research often provides deeper insights. We proposed building a list of research papers that have informed enforcement efforts to highlight this connection. One possible explanation for the disconnect is that research papers may be too technical for broader application.

The recommendations that emerged were:

- Find better ways for research findings relevant to enforcement or compliance to reach regulators, for example through a privileged contact point and public consultations.

- Publicly show when papers are actually used in investigations so researchers can value and be recognized for their contribution (only one case so far).

- Publish a “How to” guide on contacting the CNIL as a researcher.